Google Search is powered by a set of interacting algorithms that retrieve, rank, and present results. Understanding how Google works means looking at the mechanisms behind those algorithms, many of which rely on machine learning and artificial intelligence and are updated continuously with the aim of giving users the most relevant and reliable information.

Algorithms Revealed by Google

Below, we look at the key algorithms discussed in two pivotal trial documents: the testimony of Pandu Nayak (Google's VP of Search) and Professor Douglas W. Oard’s Refutation Testimony, which rebutted the opinions of Google’s expert witness, Professor Edward A. Fox.

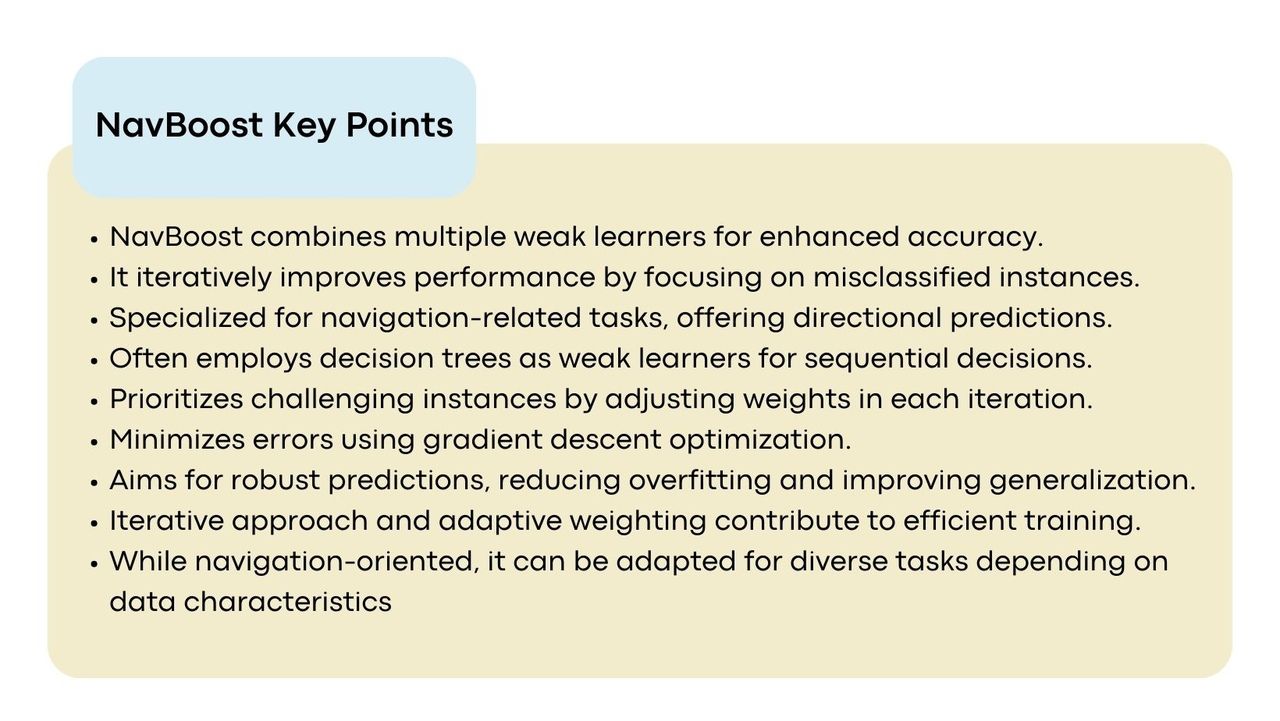

1. Navboost

Navboost is one of Google's most important ranking signals. It collects data on how users interact with search results, primarily their clicks, and uses that data to improve rankings; its algorithms are also informed by quality ratings from human evaluators. Google has experimented with removing Navboost and found that search quality deteriorated without it.
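Google has not published how Navboost works internally, so the following is only a minimal sketch of the general idea of click-based re-ranking. It assumes a hypothetical click log of `(query, url, clicked)` tuples, aggregates clicks per query and URL into a smoothed click-through rate, and blends that rate into a base relevance score. The function names, the smoothing prior, and the blend `weight` are illustrative choices, not Google's.

```python
from collections import defaultdict

# Hypothetical illustration only: Navboost's real data and features are not public.
PRIOR_CLICKS = 1.0
PRIOR_IMPRESSIONS = 10.0


def click_rates(log):
    """Smoothed click-through rate for every (query, url) pair in a click log.

    `log` is an iterable of (query, url, clicked) tuples, one per impression.
    """
    clicks = defaultdict(float)
    impressions = defaultdict(float)
    for query, url, clicked in log:
        impressions[(query, url)] += 1
        if clicked:
            clicks[(query, url)] += 1
    # Additive smoothing pulls pairs with few impressions toward the prior rate
    # (1/10 here) instead of letting a single click produce a 100% rate.
    return {
        key: (clicks[key] + PRIOR_CLICKS) / (impressions[key] + PRIOR_IMPRESSIONS)
        for key in impressions
    }


def rerank(query, base_scores, rates, weight=0.5):
    """Sort URLs by base relevance score plus a weighted click-rate boost."""
    prior = PRIOR_CLICKS / PRIOR_IMPRESSIONS  # used for pairs with no click data
    return sorted(
        base_scores,
        key=lambda url: base_scores[url] + weight * rates.get((query, url), prior),
        reverse=True,
    )


log = [
    ("jaguar", "a.example", True),
    ("jaguar", "a.example", True),
    ("jaguar", "b.example", False),
    ("jaguar", "b.example", False),
]
rates = click_rates(log)
print(rerank("jaguar", {"a.example": 0.50, "b.example": 0.55}, rates))  # ['a.example', 'b.example']
```

Here the consistently clicked `a.example` overtakes `b.example` despite a slightly lower base score. The smoothing matters: without it, a URL clicked once in a single impression would get a perfect rate and leapfrog results backed by far more evidence.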

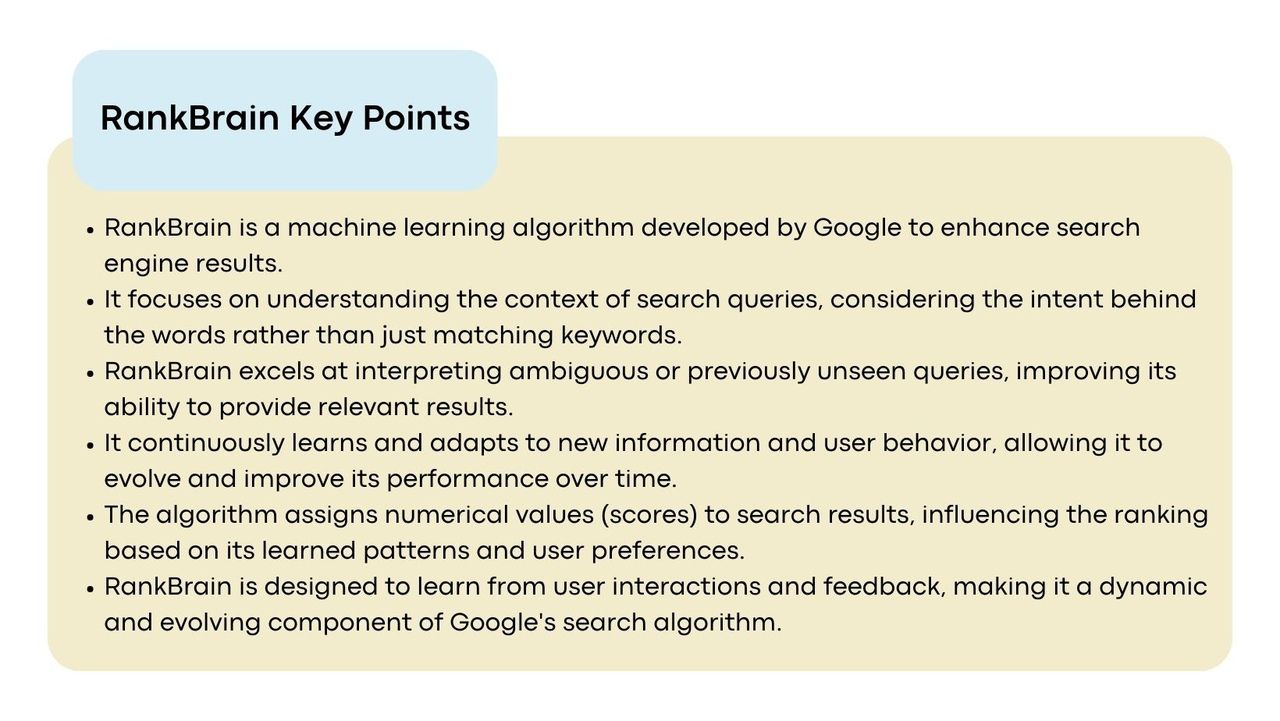

2. RankBrain

Launched in 2015, RankBrain is an AI and machine learning system that plays an integral part in processing search results. It is good at understanding the nuances of language and user intent, especially for ambiguous or complex queries. RankBrain runs on Tensor Processing Units (TPUs), and Google has described it as the third most important ranking factor, after content and links.

QBST (Query Based Salient Terms)

QBST focuses on identifying essential terms within a query and related documents to influence result rankings. It aids in recognizing critical aspects of a user’s query, which is particularly beneficial for intricate or ambiguous searches.
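QBST's actual features are not public. As a rough sketch, assume that "salient" terms for a query are those much more frequent in documents associated with that query (for example, clicked results) than in a general background corpus. The hypothetical `salient_terms` function below scores each term with a smoothed log-ratio of the two relative frequencies; this is a standard text-statistics technique swapped in for illustration, not QBST itself.

```python
import math
from collections import Counter


def salient_terms(query_docs, background_docs, top_k=3, alpha=1.0):
    """Rank terms that are over-represented in query-related documents.

    Score = log P(term | query docs) - log P(term | background), with add-alpha
    smoothing so terms unseen in the background do not cause a division by zero.
    """
    fg = Counter(w for doc in query_docs for w in doc.lower().split())
    bg = Counter(w for doc in background_docs for w in doc.lower().split())
    vocab_size = len(set(fg) | set(bg))
    fg_total = sum(fg.values()) + alpha * vocab_size
    bg_total = sum(bg.values()) + alpha * vocab_size
    scores = {
        w: math.log((fg[w] + alpha) / fg_total) - math.log((bg[w] + alpha) / bg_total)
        for w in fg
    }
    # Highest score first; ties broken alphabetically for a stable result.
    return sorted(scores, key=lambda w: (-scores[w], w))[:top_k]


clicked = ["jaguar top speed", "jaguar running speed"]
background = ["the car is fast", "the cat sleeps", "jaguar car dealer"]
print(salient_terms(clicked, background))  # ['speed', 'running', 'top']
```

"jaguar" appears in every clicked document but also in the background, so it ranks below "speed", which is what distinguishes this query's intent (the animal's speed rather than the car brand).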

Term Weighting

Term Weighting adjusts the importance of each term in a query based on how users interact with search results. This keeps each term's weight in line with its relevance in the context of the query, so that results stay balanced between common and rare terms.
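To make "balancing common and rare terms" concrete, here is a minimal sketch that starts from inverse document frequency (IDF), a classic information-retrieval weighting in which rare terms count more, and then applies a per-term multiplier standing in for what might be learned from user interactions. The `click_factor` input and all names are hypothetical; the source does not say how Google combines these signals.

```python
import math


def term_weights(query, doc_freq, num_docs, click_factor=None):
    """Normalized weight for each query term.

    Base weight is smoothed IDF (rare terms weigh more). `click_factor` is a
    hypothetical per-term multiplier learned from user interactions; 1.0 means
    no adjustment.
    """
    click_factor = click_factor or {}
    raw = {}
    for term in query.lower().split():
        idf = math.log((num_docs + 1) / (doc_freq.get(term, 0) + 1)) + 1
        raw[term] = idf * click_factor.get(term, 1.0)
    if not raw:
        return {}
    total = sum(raw.values())
    # Normalize so the weights sum to 1 and are comparable across queries.
    return {term: w / total for term, w in raw.items()}


doc_freq = {"how": 900, "to": 950, "fix": 120, "zipper": 5}
print(term_weights("how to fix zipper", doc_freq, num_docs=1000))
```

With IDF alone, "zipper" dominates because it is rare. Passing a hypothetical `click_factor={"fix": 3.0}` (as if users mostly clicked repair guides) lifts "fix" above it, illustrating how interaction data could rebalance purely statistical weights.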

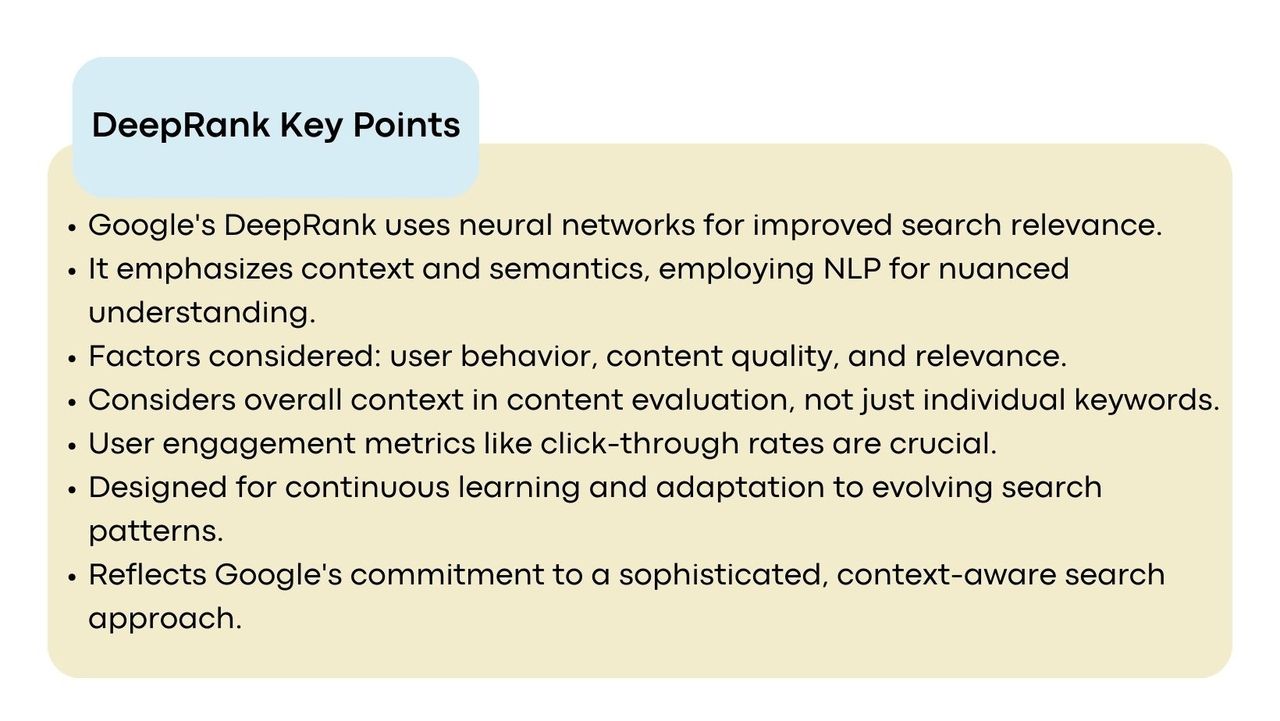

3. DeepRank (BERT)

DeepRank is Google's internal name for BERT when it is used for ranking. It goes deeper into understanding natural language, and its results are refined using user feedback and clicks. Because it is pre-trained on a large corpus of documents, DeepRank can then be fine-tuned to match results to user intent and context.

4. RankEmbed and RankEmbed-BERT

RankEmbed likely focuses on embedding relevant features for ranking, while RankEmbed-BERT integrates BERT's algorithm and structure to enhance language comprehension. RankEmbed-BERT contributes to the final ranking score, operating after the initial retrieval of results.
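Since the text itself only says RankEmbed "likely" embeds features for ranking and runs after initial retrieval, the sketch below shows the generic embedding re-ranking pattern under that assumption: the query and each already-retrieved document are represented as vectors, and candidates are re-ordered by cosine similarity. The toy three-dimensional vectors are hand-written stand-ins for what a trained encoder (such as a BERT-style model) would produce; none of this reflects RankEmbed's actual architecture.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rerank_by_embedding(query_vec, candidate_vecs):
    """Re-rank already-retrieved candidates by similarity to the query embedding."""
    return sorted(
        candidate_vecs,
        key=lambda doc: cosine(query_vec, candidate_vecs[doc]),
        reverse=True,
    )


# Toy 3-dimensional vectors standing in for learned embeddings.
query_vec = [1.0, 0.0, 0.5]
candidates = {
    "doc_a": [0.9, 0.1, 0.4],
    "doc_b": [0.0, 1.0, 0.0],
    "doc_c": [0.5, 0.5, 0.5],
}
print(rerank_by_embedding(query_vec, candidates))  # ['doc_a', 'doc_c', 'doc_b']
```

In a real system, a similarity like this would be one input to the final ranking score rather than the whole score.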

5. MUM

Launched in June 2021, MUM is, according to Google, about 1,000 times more powerful than BERT; it understands 75 languages and can process information in multiple formats. This multimodal capability lets MUM give comprehensive, contextual answers, reducing the need for multiple searches.

6. Tangram and Glue

Tangram assembles the Search Engine Results Page (SERP) using data from Glue, arranging results in a user-friendly layout. Together, the two systems also take non-text elements into account, such as image carousels and direct answers.

7. Freshness Node and Instant Glue

Freshness Node and Instant Glue ensure current and relevant results, prioritizing recent information. This is particularly crucial for searches related to news or current events.

By combining these algorithms, Google aims to understand user queries, determine result relevance, prioritize freshness, and personalize results based on user context, such as location and device.

Metrics Used by Google to Evaluate Search Quality

In this section, we'll delve into the metrics revealed by the Refutation Testimony of Professor Douglas W. Oard and information from the "Project Veritas" leak, shedding light on how Google assesses and refines its search algorithms.

1. IS Score

Google relies on human evaluators to shape its search products, and the Information Satisfaction score (IS score) is a key metric derived from their ratings. The score ranges from 0 to 100 and serves as a primary indicator of search quality. As of 2021, IS4 was considered a vital metric for evaluating ranking, even though it is acknowledged to be only an approximation of user utility. A derived metric, IS4@5, measures the quality of the first five search results, excluding elements such as ads.
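The exact IS4 formula has not been disclosed, so the following is only a sketch of what an "@5" metric on a 0 to 100 scale could look like. It assumes each result carries a human rating between 0 and 1, skips ads, keeps the top five organic results, and averages their ratings with a logarithmic position discount borrowed from the well-known DCG metric. The discount and the `is_at_k` name are assumptions for illustration.

```python
import math


def is_at_k(results, k=5):
    """Hypothetical IS-style score on a 0-100 scale.

    `results` is the ranked SERP as a list of dicts with a human `rating` in
    [0, 1] and an optional `is_ad` flag. Ads are skipped, the top-k organic
    results are kept, and ratings are averaged with a logarithmic position
    discount (an assumption borrowed from DCG, not Google's formula).
    """
    organic = [r for r in results if not r.get("is_ad", False)][:k]
    if not organic:
        return 0.0
    weights = [1 / math.log2(pos + 2) for pos in range(len(organic))]
    weighted = sum(w * r["rating"] for w, r in zip(weights, organic))
    return 100 * weighted / sum(weights)


serp = [
    {"rating": 0.0, "is_ad": True},  # ad: excluded from the score
    {"rating": 1.0},
    {"rating": 0.5},
    {"rating": 0.0},
    {"rating": 1.0},
    {"rating": 1.0},
    {"rating": 0.0},  # sixth organic result: outside the top 5
]
print(round(is_at_k(serp), 1))
```

Because of the position discount, a poor result in first place lowers the score more than the same result in fifth place.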

Limitations and Challenges with Human Evaluators

- Temporal Mismatches: Ratings can be skewed when the query was issued, the document was captured, and the evaluation was made at different times.

- Reusing Evaluations: Reused evaluations may no longer reflect how relevant the content currently is.

- Understanding Technical Queries: Evaluators may struggle to understand technical queries, which affects their assessments.

- Evaluating Popularity: Evaluators find it hard to judge which of several competing interpretations of a query, or which of several rival products, is more popular.

- Diversity of Evaluators: Evaluators lack diversity; they are all adults and do not reflect the full user base.

- User-Generated Content: Evaluators might undervalue user-generated content, lowering its assessed relevance.

- Freshness Node Training: Challenges in tuning freshness models due to a lack of adequate training labels.

2. PQ (Page Quality)

PQ appears to stand for Page Quality. The trial documents say little about it beyond acknowledging it as a metric. The main source on PQ is the Search Quality Rater Guidelines, which are updated periodically and make page-quality rating a task for human evaluators.

These ratings are then fed into algorithms to build models, a process that the documents leaked to "Project Veritas" appear to describe. Interestingly, quality raters seem to assess pages exclusively on mobile devices.

3. Side-by-Side

This likely pertains to evaluations where two sets of search results are presented for comparison. Google previously had a tool called "sxse" that allowed users to vote on their preferred set of results. This direct feedback aided in assessing the effectiveness of different versions of the search system.
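The source does not describe what "sxse" reports, so this is a minimal sketch assuming each vote is `"A"` (current results), `"B"` (candidate results), or `"same"`. It tallies the votes, computes the candidate's win rate among decisive votes, and adds an exact two-sided sign test, a standard statistical choice swapped in here to ask whether the preference could be chance. All names are hypothetical.

```python
import math
from collections import Counter


def sign_test_p(wins, losses):
    """Two-sided exact sign test p-value for wins vs. losses (ties excluded)."""
    n = wins + losses
    if n == 0:
        return 1.0
    tail = sum(math.comb(n, i) for i in range(min(wins, losses) + 1))
    return min(1.0, 2 * tail / 2 ** n)


def summarize_side_by_side(votes):
    """Summarize rater votes of 'A' (current results), 'B' (candidate) or 'same'."""
    counts = Counter(votes)
    a, b = counts["A"], counts["B"]
    decisive = a + b
    return {
        "A": a,
        "B": b,
        "same": counts["same"],
        "b_win_rate": b / decisive if decisive else 0.5,
        "p_value": sign_test_p(b, a),
    }


votes = ["B"] * 8 + ["A"] * 2 + ["same"] * 5
print(summarize_side_by_side(votes))
```

An 8-to-2 preference looks strong, but with only ten decisive votes the p-value is about 0.11, a reminder that side-by-side comparisons need enough raters before a change is judged better.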

4. Live Experiments

Official information from "How Search Works" reveals that Google conducts experiments with real traffic to test new features. These experiments involve activating a feature for a small user percentage and comparing their behavior with a control group. Key metrics include clicks on results, number of searches, query abandonment, and time taken for users to click on a result.

- Position Weighted Long Clicks: Considers click duration and position on the results page, reflecting user satisfaction.

- Attention: Potentially measures time spent on a page, providing insights into user interaction and content engagement.
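As an illustration of comparing a treatment group with a control group, here is a sketch of a "position weighted long clicks" metric plus query abandonment. Two definitions are assumptions, since the source gives only the metric names: a click counts as "long" if the user stays at least 30 seconds, and each long click is weighted by 1/position so that satisfying clicks near the top count more.

```python
from statistics import mean

# Hypothetical threshold: Google's definition of a "long click" is not public.
LONG_CLICK_SECONDS = 30


def position_weighted_long_clicks(session):
    """Score one query session given as a list of (position, dwell_seconds) clicks.

    Each long click contributes 1/position, so a satisfying click on the
    first result counts more than one further down the page.
    """
    return sum((1.0 / pos for pos, dwell in session if dwell >= LONG_CLICK_SECONDS), 0.0)


def summarize_arm(sessions):
    """Mean metric and abandonment rate (sessions with no clicks) for one arm."""
    return {
        "pwlc": mean(position_weighted_long_clicks(s) for s in sessions),
        "abandonment": mean(0.0 if s else 1.0 for s in sessions),
    }


control = [[(3, 40)], [], [(1, 5)]]
treatment = [[(1, 60)], [(2, 45)], []]
print(summarize_arm(control), summarize_arm(treatment))
```

In this toy data both groups abandon one query in three, but the treatment group gets long clicks higher on the page, so its metric is higher. A real experiment would also need far more sessions and a significance test before drawing conclusions.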

5. Freshness

Freshness is crucial for search results, and the Freshness Node corrects scores that have become outdated. The Freshness Node and Instant Glue, which belong to the same family of microservices as Tangram and Glue, help ensure that fresh content is prioritized appropriately.

Freshness Metrics

- Correlated NGrams and Salient Terms: Detecting spikes in interest or related activity.

- Unigrams (RTW): Relevant to trend detection.

- Half Hours since epoch (TEHH): Measures time as the number of half-hour intervals elapsed since the epoch.

- Knowledge Graph Entities (RTKG): Enrich search with semantic understanding.

- S2 Cells (S2): Geographic indexing.

- Freshbox Article Score (RTF)

- Document NSR (RTN): News relevance of the document.

- Geographical Dimensions: Defines the geographical location of an event or topic mentioned in the document.
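The TEHH feature is easy to make concrete. The sketch below assumes "epoch" means the Unix epoch (1970-01-01 00:00 UTC) and counts whole half-hour intervals since then. It then shows one hypothetical way such a timestamp could feed a freshness score: an exponential decay whose half-life is measured in half-hours. The decay function and its 48-half-hour (one day) default are illustrative assumptions, not Google's method.

```python
from datetime import datetime, timezone

HALF_HOUR_SECONDS = 30 * 60


def half_hours_since_epoch(ts: datetime) -> int:
    """Whole half-hour intervals between the Unix epoch and a timezone-aware `ts`."""
    if ts.tzinfo is None:
        raise ValueError("use a timezone-aware datetime to avoid local-time ambiguity")
    return int(ts.timestamp() // HALF_HOUR_SECONDS)


def freshness_boost(doc_tehh: int, now_tehh: int, half_life: int = 48) -> float:
    """Hypothetical exponential decay: the boost halves every `half_life` half-hours
    (48 half-hours = 1 day). Future-dated documents are treated as brand new."""
    age = max(0, now_tehh - doc_tehh)
    return 0.5 ** (age / half_life)


published = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
print(freshness_boost(half_hours_since_epoch(published), half_hours_since_epoch(now)))  # 0.5
```

Bucketing time into half-hours keeps the feature a small integer while still being fine-grained enough to track fast-moving news.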

Collectively, these metrics contribute to Google's efforts to enhance search quality and user satisfaction.

Unveiling the Significance of Clicks in Google's Search Dynamics

Internal presentations, emails, and leaks show how central user clicks are to Google's search engine. From the "Unified Click Prediction" email to the "Google is Magical" presentation, the documents make clear that clicks are not a minor detail but a cornerstone of how Google keeps improving search.

Note that most of the documents discussed here predate 2016, and Google has evolved significantly since then. Despite these changes, the core of its strategy remains the analysis of user behavior, which it treats as a key quality signal. The CAS MODEL, described in a patent, incorporates clicks, attention, and satisfaction into a model for evaluating Search Engine Result Pages (SERPs).

The CAS MODEL: A Feedback Loop of Learning

Every search query and subsequent click feeds Google's learning process, forming a perpetual feedback loop. This loop, the foundation of the CAS MODEL, lets Google adapt and keep refining its understanding of user preferences and behavior. It is this approach that creates the illusion that Google deeply understands what users need.

Clicks also reveal more than human evaluator ratings can. According to the documents, human evaluations provide only a basic view, while clicks uncover complex patterns of search behavior, including second- and third-order effects.

Second-Order Effects: Adapting to Emerging Patterns

Google discerns emerging patterns based on user preferences. For instance, if users consistently favor in-depth articles over quick lists, Google adjusts its algorithms to prioritize such detailed content in related searches.

Third-Order Effects: Shaping Long-Term Changes

Broader, long-term changes occur as content creators adapt to click trends. If users show a preference for comprehensive guides, content creators produce more detailed articles and fewer lists, thereby altering the nature of available content on the web.

Leveraging Click Analysis for Relevance Improvement

A specific case in the analyzed documents shows how Google improved the relevance of search results through click analysis. Among a set of 15,000 documents considered irrelevant, a few stood out because of user clicks, showing how clicks can reveal relevance hidden in large datasets.
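The documents do not say how those few documents were singled out. One plausible approach, sketched here under that assumption, is to compare each document's observed click-through rate with the typical rate for the position it was shown at, and flag documents rated irrelevant that are clicked far more than expected. The field names, the `lift` threshold, and the minimum-impressions cutoff are hypothetical.

```python
def hidden_relevance(docs, expected_ctr, min_impressions=100, lift=2.0):
    """Flag documents rated irrelevant whose observed click-through rate is at
    least `lift` times the expected rate for the position they were shown at.

    `docs` are dicts with url, rated_relevant, position, impressions, clicks;
    `expected_ctr` maps a result position to its typical click-through rate.
    """
    flagged = []
    for d in docs:
        if d["rated_relevant"] or d["impressions"] < min_impressions:
            continue  # already relevant, or too little data to trust the rate
        ctr = d["clicks"] / d["impressions"]
        if ctr >= lift * expected_ctr[d["position"]]:
            flagged.append(d["url"])
    return flagged


expected_ctr = {1: 0.30, 5: 0.05}
docs = [
    {"url": "u1", "rated_relevant": False, "position": 5, "impressions": 200, "clicks": 30},
    {"url": "u2", "rated_relevant": False, "position": 5, "impressions": 200, "clicks": 8},
    {"url": "u3", "rated_relevant": True, "position": 1, "impressions": 500, "clicks": 150},
    {"url": "u4", "rated_relevant": False, "position": 5, "impressions": 50, "clicks": 40},
]
print(hidden_relevance(docs, expected_ctr))  # ['u1']
```

Normalizing by position matters because top results attract clicks regardless of quality; a document shown at position 5 that still draws three times the usual clicks is a stronger hint of hidden relevance. The impressions cutoff keeps small samples, like `u4`, from triggering false alarms.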

Training with the Past to Predict the Future

Google's strategy involves training with the past to predict the future, which helps avoid overfitting. Continuous evaluation and data updates keep models current and relevant. Personalization by location is a key part of this strategy, tailoring results so they are pertinent to users in different regions.
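"Training with the past to predict the future" corresponds to a common machine-learning practice: splitting data by time rather than at random, so a model is always evaluated on data from after its training period. The sketch below shows such a split plus a rolling version that mimics periodic retraining. Whether Google uses this exact scheme is not stated in the source; the `day` field and function names are hypothetical.

```python
from datetime import date, timedelta


def temporal_split(examples, cutoff):
    """Train on examples dated before `cutoff`; evaluate on the rest.

    Evaluating only on later data means the model is always scored on its
    'future', which can reveal overfitting that a random split might hide.
    """
    train = [e for e in examples if e["day"] < cutoff]
    test = [e for e in examples if e["day"] >= cutoff]
    return train, test


def rolling_splits(examples, cutoffs):
    """One split per cutoff, mimicking periodic retraining on fresher data."""
    return [temporal_split(examples, c) for c in cutoffs]


start = date(2024, 1, 1)
examples = [{"day": start + timedelta(days=i), "label": i % 2} for i in range(5)]
train, test = temporal_split(examples, date(2024, 1, 4))
print(len(train), len(test))  # 3 2
```

A random split would let the model train on some later examples and be tested on earlier ones, which flatters its accuracy when user behavior drifts over time.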

While personalization is acknowledged, a more recent document from Google suggests that it rarely changes rankings and does not occur in "Top Stories." The limited use of personalization is primarily to understand search context and provide predictive suggestions with autocomplete. Google emphasizes that the query itself holds more importance than individual user data.

Challenges and Considerations

However, the click-focused approach is not without challenges, especially when dealing with new or infrequent content. Evaluating the quality of search results is a nuanced process that goes beyond a simple count of clicks.

The Struggle for Dominance: Google and Chrome in the World of Search Engines

In the competitive landscape of internet browsers and search engines, the significance of default options cannot be overstated. Behavioral economist and Caltech professor Antonio Rangel sheds light on the influential role of default settings in shaping user choices. As Jim Kolotouros, a VP at Google, revealed, Google's Chrome browser is more than just a browser; it is a vital component in Google's quest for search dominance.

Chrome as a Gateway to Google's Ecosystem

Chrome's popularity extends beyond its user base; it serves as a gateway to Google's vast ecosystem. The integration of Chrome with Google Search, coupled with its default search engine status, provides Google with a substantial advantage in steering the flow of information and digital advertising. The collection of user data, including search patterns and website interactions, contributes crucially to refining algorithms and enhancing the precision of search results.

Antonio Rangel emphasizes that Chrome's market supremacy is not merely about popularity but about influencing how users access information and online services. The default setting of Google as the search engine in Chrome plays a pivotal role in this influence, offering Google a significant edge in the competitive digital landscape.

Default Choices: Convenience, Bias, and Privacy

While Bing may not be an inferior search engine, Google's default configuration holds sway due to the convenience it offers and associated cognitive biases. The inertia involved in changing default search engines, especially on mobile devices, amplifies the impact. With up to 12 clicks required to modify the default search engine, users often stick with the default, further reinforcing Google's dominance.

This default preference also extends to consumer privacy decisions. Google's default privacy settings allow significant data collection, and users who want to share less data face a barrier: they must be aware of the alternatives, learn the necessary steps, and then make the changes, a process that introduces considerable friction. Behavioral biases such as status quo bias and loss aversion further tilt users toward keeping Google's defaults.

The Strategic Significance of Homepage Settings

Antonio Rangel's testimony aligns with internal analyses from Google. The browser's homepage setting emerges as a key factor influencing search engine market share and user behavior. Users with Google as their default homepage perform significantly more searches on Google than those without this default setting.

The influence of homepage settings varies across regions, with a more pronounced effect in Europe, the Middle East, Africa, and Latin America compared to Asia-Pacific and North America. Notably, Google appears less vulnerable to changes in the homepage setting than its competitors like Yahoo and MSN, which could suffer substantial losses if deprived of this default advantage.

The analysis underscores the strategic importance of the homepage setting for Google, serving not only to maintain market share but also as a potential vulnerability for its competitors. It emphasizes that most users do not actively choose a search engine but lean toward the default access provided by their homepage setting. In economic terms, Google stands to gain an estimated incremental lifetime value of approximately $3 per user when set as the homepage.

Conclusion

Google's search algorithms form a dynamic tapestry, evolving to enhance user experience. From Navboost to MUM, we've explored the layers powering the search giant. These algorithms decode user intent, prioritize freshness, and personalize results.

In our deep dive into Google's search metrics, we examined IS Score, PQ, side-by-side testing, and the crucial role of freshness. The balance between human evaluation and algorithms underscores Google's commitment to relevance.

User clicks play a pivotal role, driving the CAS MODEL feedback loop and influencing content creators. Beyond the algorithms, the analysis highlights Google's strategic use of default options, with Chrome playing a central role in shaping user choices.

As we conclude, the battle for default supremacy remains critical in Google's quest to shape online experiences. The nuanced dance between default settings, user choices, and market dominance reveals the strategies at play in Google's digital realm.

For further reading, you might be interested in the following: